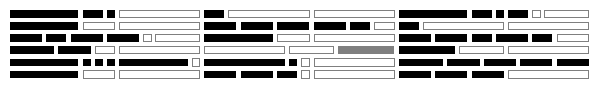

Figure 2: Illustration of the instruction alignment enforced by our technique. Black filled rectangles represent instructions of various lengths present in the original program. Gray outline rectangles represent added no-op instructions. Instructions are not packed as tightly as possible into chunks, because jump targets must be aligned and because the rewriter cannot always predict the length of an instruction. Call instructions (gray filled boxes) go at the end of chunks, so that the addresses following them are aligned.
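The layout rules in the caption can be sketched as a single pass over the instruction stream. This is a minimal illustration under several assumptions that do not come from the figure: a fixed chunk size of 16 bytes, instruction lengths that are known in advance and never exceed one chunk, and a run of padding recorded as one `nop*n` entry instead of `n` separate one-byte no-ops. The names `Insn`, `layout`, and `CHUNK_SIZE` are hypothetical. Because the sketch takes lengths as given, it does not model the uncertainty about instruction length that the caption lists as a second reason for loose packing.

```python
from dataclasses import dataclass

CHUNK_SIZE = 16  # assumed chunk size in bytes (illustrative only)


@dataclass
class Insn:
    name: str
    length: int                   # assumed known and <= CHUNK_SIZE
    is_jump_target: bool = False  # must start at a chunk boundary
    is_call: bool = False         # must end at a chunk boundary


def layout(insns, chunk=CHUNK_SIZE):
    """Return (offset, name) pairs, inserting no-op padding so that no
    instruction crosses a chunk boundary, jump targets start a chunk,
    and calls end a chunk (so the return address is chunk-aligned)."""
    out = []
    pc = 0

    def pad(n):
        # Emit n bytes of no-op padding as one summary entry.
        nonlocal pc
        if n > 0:
            out.append((pc, f"nop*{n}"))
            pc += n

    for insn in insns:
        room = chunk - pc % chunk  # bytes left in the current chunk
        if insn.is_jump_target and pc % chunk != 0:
            pad(room)              # move to the start of the next chunk
            room = chunk
        if insn.is_call:
            if insn.length > room:
                pad(room)          # call would straddle: start a new chunk
                room = chunk
            pad(room - insn.length)  # push the call to the end of the chunk
        elif insn.length > room:
            pad(room)              # instruction would straddle a boundary
        out.append((pc, insn.name))
        pc += insn.length
    return out
```

For example, `layout([Insn("mov", 5), Insn("add", 3), Insn("cmp", 6), Insn("loop", 4, is_jump_target=True), Insn("call", 5, is_call=True), Insn("ret", 1)])` inserts 2 bytes of padding before the jump target `loop` and 7 bytes before `call`. As a result, `ret` (the return address) lands at offset 32, which is a chunk boundary.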